This weekend we had a bout of bad weather. Our secondhand NOAA weather radio sounded off repeatedly, local weather broadcasters breathlessly reported rotational formations on television, iPhones buzzed with emergency alerts, and city sirens sounded to announce a Tornado Warning. Each of these mechanisms relies on the National Weather Service forecast office in Little Rock (LZK), a part of the National Oceanic and Atmospheric Administration.

NWS makes some of its most important information available through alerts, which are broadcast over weather radio following the classic tone. Our weather radio listens for these tones and either blinks an alarm light, emits a siren, or tunes into the broadcast for a preset time before returning to ready mode. What if we could retrieve this information programmatically and print it out on some sort of continuous-feed paper?

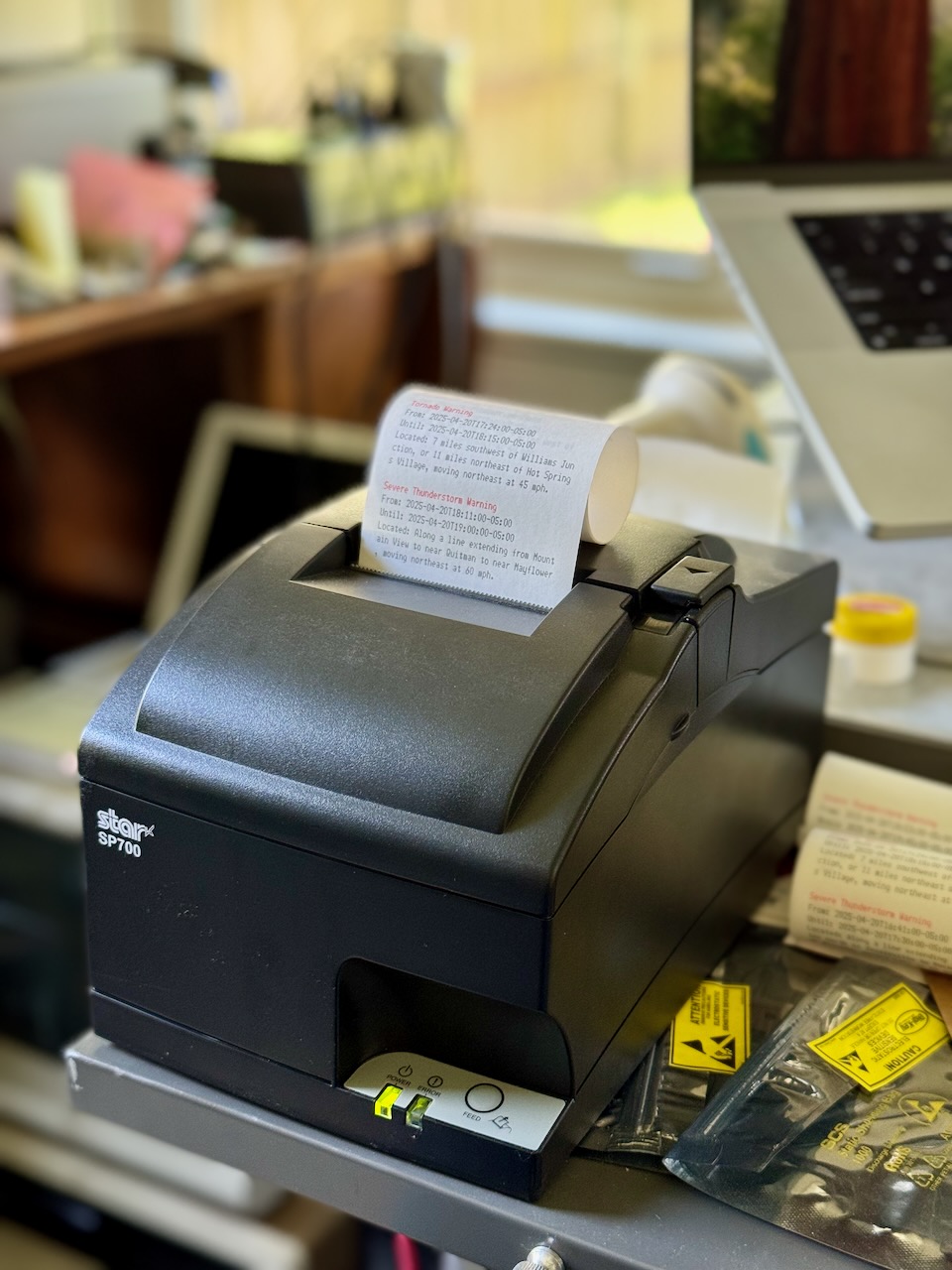

Star SP-700

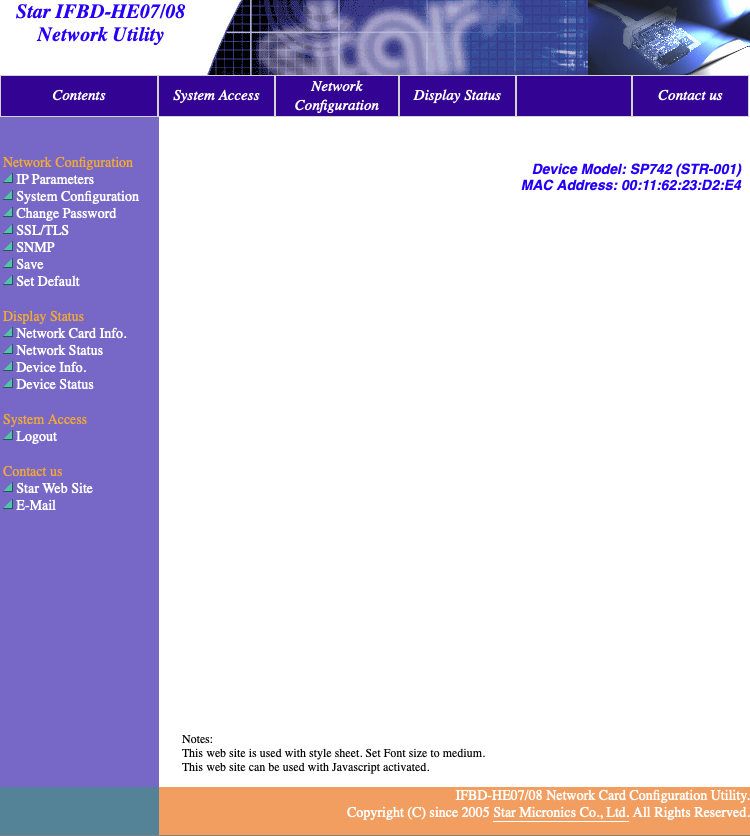

Months ago, I stumbled upon a Star SP-700 high-speed, two-color matrix printer at Goodwill, complete with the 10/100 Base-T interface module which allows for networking. The network interface provides an interactive web interface and support for DHCP, TLS, SNMP, FTP, and even telnet. And, because this is not a thermal receipt printer, there's no risk of BPS exposure.

A telnet configuration session:

; telnet star-sp700.home.arpa

Trying 10.0.3.21...

Connected to star-sp700.home.arpa.

Escape character is '^]'.

Welcome to IFBD-HE07/08 TELNET Utility.

Copyright(C) 2005 Star Micronics co., Ltd.

<< Connected Device >>

Device Model: SP742 (STR-001)

NIC Product : IFBD-HE07/08

MAC Address : 00:11:62:23:D2:E4

login: root

Password: ******

Hello root

=== Main Menu ===

1) IP Parameters Configuration

2) System Configuration

3) Change Password

5) SNMP

96) Display Status

97) Reset Settings to Defaults

98) Save & Restart

99) Quit

Enter Selection:

Like FTP, telnet support is surprisingly common on printer network cards, for example the HP LaserJet card. Another similarity: the Star SP-700 supports raw TCP/IP printing on port 9100, which in its case is plain ASCII text punctuated with control codes, using \r\n for line termination. The SP700 Series Programmer's Manual enumerates the supported escape sequences in chapter nine. In my program, I used:

| Sequence | Description |
|---|---|
| <ESC> "4" | Select highlight printing (red text) |
| <ESC> "5" | Cancel highlight printing |
| <ESC> "E" | Select emphasized printing (bold text) |
| <ESC> "F" | Cancel emphasized printing |
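As a rough illustration of how these codes frame a line of text, here is a minimal sketch that builds the bytes for a red, bold header. It is written in Python rather than rc only so that it runs without plan9port. The name `header_line` is made up for this example, and it assumes `<ESC> "5"` cancels highlighting, which is what the jq program later in this post sends.

```python
ESC = b"\x1b"

# Escape sequences used in this post (Star SP-700 line mode).
HIGHLIGHT_ON = ESC + b"4"   # red text
HIGHLIGHT_OFF = ESC + b"5"  # assumed cancel code, matching the jq program
BOLD_ON = ESC + b"E"
BOLD_OFF = ESC + b"F"


def header_line(text):
    """Return text wrapped in highlight and emphasis codes, ending in CRLF."""
    # The printer expects plain ASCII; anything else is replaced with '?'.
    body = text.encode("ascii", errors="replace")
    return HIGHLIGHT_ON + BOLD_ON + body + HIGHLIGHT_OFF + BOLD_OFF + b"\r\n"
```

Calling `header_line("Tornado Warning")` yields the same byte sequence as the first line of the jq format string shown later.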

NOAA API

NOAA makes these alerts available through a well-documented public API, which supports several response formats, including two variants of JSON as well as Atom. The Atom feed contains standard fields, so it should be compatible with an RSS feed reader like NetNewsWire. In practice it isn't, because the API expects the Accept header to contain application/atom+xml and otherwise defaults to GeoJSON. A simple proxy which sets the Accept header should make this possible, though.
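I haven't built that proxy, but a minimal sketch of the idea might look like the following, using only the Python standard library. Everything here is an assumption for illustration: the names `upstream_request`, `AtomProxy`, and `serve`, the port, and the placeholder User-Agent value. It forwards any GET path to api.weather.gov with the Atom media type in the Accept header, and it does no error handling or caching.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import Request, urlopen

UPSTREAM = "https://api.weather.gov"
# Placeholder contact string; replace with your own site and email.
USER_AGENT = "(example.invalid, you@example.invalid)"


def upstream_request(path):
    """Build the upstream request with the Accept header a feed reader won't send."""
    return Request(
        UPSTREAM + path,
        headers={"Accept": "application/atom+xml", "User-Agent": USER_AGENT},
    )


class AtomProxy(BaseHTTPRequestHandler):
    def do_GET(self):
        # Fetch the same path upstream and relay the body unchanged.
        with urlopen(upstream_request(self.path), timeout=10) as resp:
            body = resp.read()
        self.send_response(200)
        self.send_header("Content-Type", "application/atom+xml")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8080):
    """Run the proxy on localhost until interrupted."""
    HTTPServer(("127.0.0.1", port), AtomProxy).serve_forever()
```

With `serve()` running, a feed reader could be pointed at `http://127.0.0.1:8080/alerts/active?area=AR` instead of the API directly.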

I opted for the JSON-LD option, which provides us with hyperlinked @ids and references to other objects, which can be fetched simply by following those links. We can even navigate it in our browser, but we'll see the default GeoJSON format; for instance here are the active alerts. Since the entire API is based on JSON-LD, the GeoJSON response still contains links.

By polling the active alerts endpoint, we can fetch an up-to-date set of alerts for events such as Tornado Warnings, Tornado Watches, Severe Thunderstorm Warnings, etc. Here is an example event pulled from that endpoint:

{

"@id":"https://api.weather.gov/alerts/urn:oid:2.49.0.1.840.0.2cb9a691a88714bdb5bbad42d2e4f414e66cb1d6.001.1",

"@type":"wx:Alert",

"id":"urn:oid:2.49.0.1.840.0.2cb9a691a88714bdb5bbad42d2e4f414e66cb1d6.001.1",

"areaDesc":"Faulkner, AR; Pulaski, AR; Saline, AR",

"geometry":"POLYGON((-92.67 34.51,-92.73 34.55,-92.47 34.9099999,-92.14 34.67,-92.67 34.51))",

"geocode":{"SAME":["005045","005119","005125"],"UGC":["ARC045","ARC119","ARC125"]},

"affectedZones":["https://api.weather.gov/zones/county/ARC045","https://api.weather.gov/zones/county/ARC119","https://api.weather.gov/zones/county/ARC125"],

"references":[],

"sent":"2025-04-20T18:31:00-05:00",

"effective":"2025-04-20T18:31:00-05:00",

"onset":"2025-04-20T18:31:00-05:00",

"expires":"2025-04-20T19:15:00-05:00",

"ends":"2025-04-20T19:15:00-05:00",

"status":"Actual",

"messageType":"Alert",

"category":"Met",

"severity":"Extreme",

"certainty":"Observed",

"urgency":"Immediate",

"event":"Tornado Warning",

"sender":"w-nws.webmaster@noaa.gov",

"senderName":"NWS Little Rock AR",

"headline":"Tornado Warning issued April 20 at 6:31PM CDT until April 20 at 7:15PM CDT by NWS Little Rock AR",

"description":"TORLZK\n\nThe National Weather Service in Little Rock has issued a\n\n* Tornado Warning for...\nSouthwestern Faulkner County in central Arkansas...\nCentral Saline County in central Arkansas...\nCentral Pulaski County in central Arkansas...\n\n* Until 715 PM CDT.\n\n* At 630 PM CDT, a severe thunderstorm capable of producing a tornado\nwas located over Haskell, or near Benton, moving northeast at 35\nmph.\n\nHAZARD...Tornado.\n\nSOURCE...Radar indicated rotation.\n\nIMPACT...Flying debris will be dangerous to those caught without\nshelter. Mobile homes will be damaged or destroyed.\nDamage to roofs, windows, and vehicles will occur. Tree\ndamage is likely.\n\n* Locations impacted include...\nAlexander... Otter Creek...\nHiggins... College Station...\nNatural Steps... Cammack Village...\nSouthwest Little Rock... Bauxite...\nIronton... Argenta...\nQuapaw Quarter... Vimy Ridge...\nHillcrest Neighborhood... Chenal Valley...\nWar Memorial Stadium... Bryant...\nPinnacle Mountain State Park... Maumelle...\nThe Heights... Shannon Hills...",

"instruction":"TAKE COVER NOW! Move to a basement or an interior room on the lowest\nfloor of a sturdy building. Avoid windows. If you are outdoors, in a\nmobile home, or in a vehicle, move to the closest substantial shelter\nand protect yourself from flying debris.",

"response":"Shelter",

"parameters":{"AWIPSidentifier":["TORLZK"],"WMOidentifier":["WFUS54 KLZK 202331"],"eventMotionDescription":["2025-04-20T23:30:00-00:00...storm...240DEG...30KT...34.54,-92.64"],"maxHailSize":["0.00"],"tornadoDetection":["RADAR INDICATED"],"BLOCKCHANNEL":["EAS","NWEM"],"EAS-ORG":["WXR"],"VTEC":["/O.NEW.KLZK.TO.W.0091.250420T2331Z-250421T0015Z/"],"eventEndingTime":["2025-04-21T00:15:00+00:00"],"WEAHandling":["Imminent Threat"],"CMAMtext":["NWS: TORNADO WARNING in this area til 7:15 PM CDT. Take shelter now. Check media."],"CMAMlongtext":["National Weather Service: TORNADO WARNING in this area until 7:15 PM CDT. Take shelter now in a basement or an interior room on the lowest floor of a sturdy building. If you are outdoors, in a mobile home, or in a vehicle, move to the closest substantial shelter and protect yourself from flying debris. Check media."]},

"replacedBy":"https://api.weather.gov/alerts/urn:oid:2.49.0.1.840.0.79caba517735181c1a45b17add84f4df80cd7466.001.1",

"replacedAt":"2025-04-20T18:55:00-05:00"

}

Under description and instruction, we can see text which is likely meant for the computerized voice synthesis program used by the NWS weather radio broadcasts. Most of this message is the same between similar events; however, the phrase "located over Haskell, or near Benton, moving northeast at 35 mph" is not present elsewhere in the message. We can speculate that it is built from lookup tables based on the eventMotionDescription information. We can also see specific subsections mentioned, such as "Southwestern Faulkner County" and "Central Saline County", which are possibly created by analyzing how the geometry polygon intersects these regions; affectedZones only gives us a county-level list.
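To test that speculation against the one example we have, here is a small Python sketch that picks apart the eventMotionDescription string. The field layout (time, kind, bearing, speed, position, separated by `...`) is inferred from this single alert, and the bearing is assumed to be the direction the storm is coming from; neither is taken from NWS documentation. `parse_motion` is a name made up for this example.

```python
import re

# Eight-point compass, clockwise from north, 45 degrees per step.
COMPASS = ["north", "northeast", "east", "southeast",
           "south", "southwest", "west", "northwest"]
KNOTS_TO_MPH = 1.15078


def parse_motion(text):
    """Split 'time...kind...NNNDEG...NNKT...lat,lon' into a dict."""
    when, kind, bearing, speed, position = text.split("...")
    from_deg = int(re.fullmatch(r"(\d+)DEG", bearing).group(1))
    knots = int(re.fullmatch(r"(\d+)KT", speed).group(1))
    lat, lon = (float(part) for part in position.split(","))
    # Assumption: the bearing is where the storm comes FROM,
    # so it is heading in the opposite direction.
    toward_deg = (from_deg + 180) % 360
    return {
        "time": when,
        "kind": kind,
        "heading": COMPASS[round(toward_deg / 45) % 8],
        "mph": round(knots * KNOTS_TO_MPH),
        "position": (lat, lon),
    }


motion = parse_motion(
    "2025-04-20T23:30:00-00:00...storm...240DEG...30KT...34.54,-92.64"
)
print(motion["heading"], motion["mph"])  # northeast 35
```

Under those assumptions, 240 degrees at 30 knots works out to "northeast at 35 mph", which matches the wording in the description. The place names would still need a separate lookup from the coordinates.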

Program

I've been learning and using the Acme editor lately, part of plan9port, and as part of that I've been using the rc shell to write shell scripts. As outlined in the paper, rc solves some of the issues which make using bash a pain, notably the rules around quoting and handling spaces in variables. I'll introduce the program in pieces.

Our goal is to print alerts which are:

- currently active,

- pertinent to us, or local to our geographic area,

- and haven't been printed before.

For the first, we leverage the /alerts/active endpoint.

curl \

--silent \

--fail-with-body \

--show-error \

--header 'User-Agent: (weather.connor.zip, weather@connor.zip)' \

--header 'Accept: application/ld+json' \

https://api.weather.gov/alerts/active?area=AR

For the second, we first pass ?area=AR to the API (as seen above), and then use jq to filter on the affectedZones array once we've determined our zone URL. Mine is Pulaski County, or https://api.weather.gov/zones/county/ARC119.

... \

| jq \

--arg pulaski_zone $pulaski_zone \

--raw-output \

'.["@graph"][] | select(.affectedZones[] | contains($pulaski_zone))'
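For readers less familiar with jq, the same filter can be sketched in Python. This is an illustration and not part of the actual program; `alerts_for_zone` and the two-entry sample document are made up, with only the `@graph` and `affectedZones` field names taken from the API response above.

```python
PULASKI_ZONE = "https://api.weather.gov/zones/county/ARC119"


def alerts_for_zone(document, zone_url):
    """Return the alerts in a JSON-LD response whose affectedZones include zone_url."""
    return [
        alert
        for alert in document.get("@graph", [])
        if zone_url in alert.get("affectedZones", [])
    ]


# Hypothetical, heavily trimmed response with two alerts.
sample = {
    "@graph": [
        {
            "@id": "https://api.weather.gov/alerts/example-1",
            "event": "Tornado Warning",
            "affectedZones": [
                "https://api.weather.gov/zones/county/ARC045",
                "https://api.weather.gov/zones/county/ARC119",
            ],
        },
        {
            "@id": "https://api.weather.gov/alerts/example-2",
            "event": "Flood Warning",
            "affectedZones": ["https://api.weather.gov/zones/county/ARC045"],
        },
    ]
}

for alert in alerts_for_zone(sample, PULASKI_ZONE):
    print(alert["event"])  # Tornado Warning
```

One difference worth knowing: the jq version tests each zone with `contains`, a substring match, and `select` emits the alert once per matching zone, whereas this sketch uses exact list membership and yields each alert at most once. With full zone URLs the two should behave the same in practice.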

For the third, we use a combination of jq wrangling and join. First, we output each entry as one line of a sorted, tab-separated file containing two fields: the value of @id, and the full JSON representation of the entry. We store the file in a state directory, with the name new:

... \

| jq \

--arg pulaski_zone $pulaski_zone \

--raw-output \

'.["@graph"][] | select(.affectedZones[] | contains($pulaski_zone)) | "\(.["@id"])\t\(.)"' \

| sort \

>state/new

Next, we join the new entries with a file of previously seen @ids, print only the lines whose keys are found solely in the new file (previously unseen), and drop the key column to create an NDJSON-formatted stream.

if (! test -f state/seen)

touch state/seen

# the argument to -t is a single literal tab character
join -v1 -t '	' state/new state/seen \

| cut -f2
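The join and cut pair amounts to an anti-join on the first column: keep the lines from new whose key is absent from seen, then strip the key. A minimal Python sketch of that logic, with the made-up name `unseen` and invented sample ids, could look like this:

```python
def unseen(new_lines, seen_ids):
    """Return the JSON payloads of new_lines whose key is not in seen_ids.

    Each line in new_lines is 'key<TAB>payload', as written to state/new.
    """
    seen = set(seen_ids)
    payloads = []
    for line in new_lines:
        key, _, payload = line.partition("\t")
        if key not in seen:
            payloads.append(payload)
    return payloads


new = [
    'urn:a\t{"event": "Tornado Warning"}',
    'urn:b\t{"event": "Tornado Watch"}',
]
print(unseen(new, ["urn:a"]))  # ['{"event": "Tornado Watch"}']
```

Unlike join, the set lookup does not need either input to be sorted, which is why the shell version pipes through sort first.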

Now that we have our set of alerts, we can format them for printing using the escape codes mentioned above:

| jq \

--raw-output \

'"\u001b4\u001bE\(.event)\u001b5\u001bF\r\nFrom: \(.effective)\r\nUntil: \(.expires)\(.description | [scan("(was|were) located ([^\\.]+.)")] as $location | if ($location | length > 0) then $location[0][1] | gsub("\n"; " ") | sub("(?<a>^[a-z])"; "\(.a|ascii_upcase)") | "\r\nLocated: \(.)" else "" end)\r\n"'

We need to dive into this jq query a bit. The first line is easy enough:

\u001b4\u001bE\(.event)\u001b5\u001bF\r\n

The Unicode escape for ESC (0x1b) is \u001b. It is followed by 4 and E, then the value of event, which is our header (e.g. "Tornado Warning"), then 5 and F, which disable the first two respectively. The line is terminated by \r\n. The next two lines are just as simple, but we have some complex logic for description.

\(.description | [scan("(was|were) located ([^\\.]+.)")] as $location | if ($location | length > 0) then $location[0][1] | gsub("\n"; " ") | sub("(?<a>^[a-z])"; "\(.a|ascii_upcase)") | "\r\nLocated: \(.)" else "" end)

All this happens within a \(), which is how expressions are interpolated into strings. We pipe the value of description, a long text field, into scan. The scan function applies a regular expression and emits the capture groups as a stream. In our case, the regular expression (was|were) located ([^\\.]+.) matches text like "was located over Haskell, or near Benton, moving northeast at 35 mph.", stopping at the first period. The first parenthetical groups the alternation of "was" and "were." The second wraps a regular expression which uses a negated character class containing only a . (escaped with \, which is itself escaped with \); this matches one or more characters which are not a period. It is followed by the metacharacter ., which could match any character but must be a period in this case. We wrap this entire scan in an array [...], which will be either [["was", "over Haskell, or near Benton, moving northeast at 35\nmph."]] on a match (doubly nested) or [] on no match.

As the jq manual puts it: "To capture all the matches for each input string, use the idiom [ expr ], e.g. [ scan(regex) ]. If the regex contains capturing groups, the filter emits a stream of arrays, each of which contains the captured strings."

We bind this to a variable, $location, so it can be referenced multiple times in the rest of the rule. Next, we check the length of the array: if there is a match it will be length one (one array of matches), otherwise zero. If we have matched some location information, we replace any newlines with spaces using gsub, then we replace the first character with its uppercase representation using sub and a named capture group a. Finally, we add a new line with our location information preceded by a field name; otherwise we print no line at all.
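To make the steps easier to follow outside of a one-line jq string, here is the same extraction sketched in Python. It is an illustration, not the program's code: `located_line` is a made-up name, and the pattern escapes the final period explicitly, a small deliberate change from the jq regex that means text with no closing period never matches.

```python
import re

# Same shape as the jq pattern: "was"/"were", then everything up to the first period.
LOCATED = re.compile(r"(was|were) located ([^.]+\.)")


def located_line(description):
    """Return '\\r\\nLocated: ...' for the first location phrase, or '' if none."""
    match = LOCATED.search(description)
    if match is None:
        return ""
    # Unwrap the hard line breaks, then capitalize the first letter.
    text = match.group(2).replace("\n", " ")
    return "\r\nLocated: " + text[:1].upper() + text[1:]


sample = (
    "* At 630 PM CDT, a severe thunderstorm capable of producing a tornado\n"
    "was located over Haskell, or near Benton, moving northeast at 35\n"
    "mph.\n\nHAZARD...Tornado."
)
print(repr(located_line(sample)))
# '\r\nLocated: Over Haskell, or near Benton, moving northeast at 35 mph.'
```

The negated character class also matches newlines, which is why the phrase can span the wrapped lines of the description before being flattened.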

Finally, we use our own tcpw command, which simply writes to a TCP socket at a given address. Think nc or dial from plan9port, but which doesn't wait for the connection to close.

... \

| tcpw --address star-sp700.home.arpa:9100
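The tcpw source isn't shown here, so the following is only a guess at its behavior, sketched in Python: connect, write everything, and close without waiting for the peer to hang up. The name `tcp_write` and the timeout parameter are assumptions for this example.

```python
import socket


def tcp_write(address, payload, timeout=5.0):
    """Send payload to 'host:port' and close the connection immediately."""
    host, _, port = address.rpartition(":")
    with socket.create_connection((host, int(port)), timeout=timeout) as conn:
        # sendall blocks until every byte is handed to the kernel;
        # leaving the with-block closes the socket without reading a reply.
        conn.sendall(payload)
```

A call such as `tcp_write("star-sp700.home.arpa:9100", data)` would then play the role of the last stage of the pipeline, with `data` being the formatted bytes.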

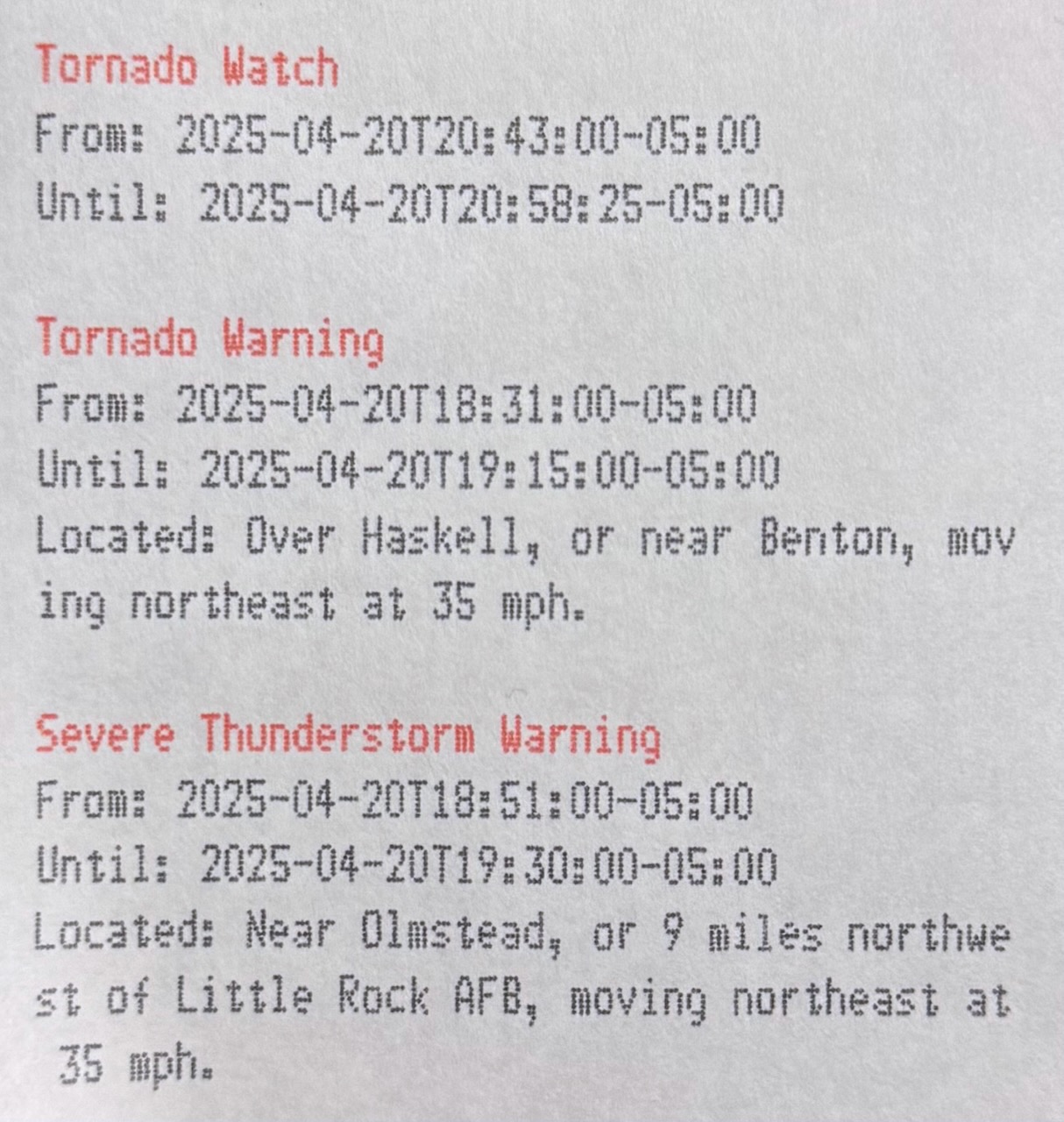

The result looks like this:

Putting it all together, see alerts on GitHub.

Deploying

Since we use the rc shell, we need to include plan9port in our Docker image. Doing so is fairly straightforward using the golang image for Alpine: install a few prerequisites, clone the repository, add the bin directory to your $PATH, and run the install script:

FROM golang:1.24.2-alpine3.21

RUN apk add --no-cache \

jq \

curl \

git \

build-base \

linux-headers \

perl

# Install plan9port (works as of 9da5b4451365e33c4f561d74a99ad5c17ff20fed)

ENV PLAN9=/usr/src/plan9port

ENV PATH="$PATH:$PLAN9/bin"

WORKDIR /usr/src/plan9port

RUN git clone https://github.com/9fans/plan9port.git . && \

./INSTALL

My standard deploy script works as follows:

#!/usr/bin/env rc

flag e +

flag x +

tag=`{git rev-parse --short HEAD}

image='us-south1-docker.pkg.dev/homelab-388417/homelab/weather'

# Build image

docker buildx build --platform linux/amd64 . --tag $image:$tag

docker tag $image:$tag $image:latest

docker push --quiet $image:$tag

docker push --quiet $image:latest

yq 'setpath(["spec", "template", "spec", "containers", 0, "image"]; "'$image:$tag'")' <k8s/deployment.yaml | kubectl apply -f -

We first grab the current commit hash to use as a tag, then we build and push the image. Finally, we use yq to replace the container image in the deployment manifest and pass the result to kubectl, which applies it to the cluster.

In Acme, ensure BUILDKIT_PROGRESS=plain is set so that the output can be seen clearly in win.